Making Predictions After Cross-Validation: A Comprehensive Guide

Understanding the intricacies of cross-validation and its role in predictive modeling is crucial for any data scientist or machine learning enthusiast. Once you’ve successfully navigated the cross-validation process, the next step is to make predictions. This article will delve into the various aspects of making predictions after cross-validation, ensuring you have a thorough understanding of the process.

What is Cross-Validation?

Cross-validation is a technique used to assess how well a predictive model will generalize to an independent data set. The data is partitioned into a training set, used to fit the model, and a validation set, used to measure the model’s performance. This process is repeated several times with a different partition each time, and the resulting scores are averaged so that the performance estimate does not depend on any single split of the data.

Types of Cross-Validation

There are several types of cross-validation methods, each with its own advantages and disadvantages. The most common types include:

-

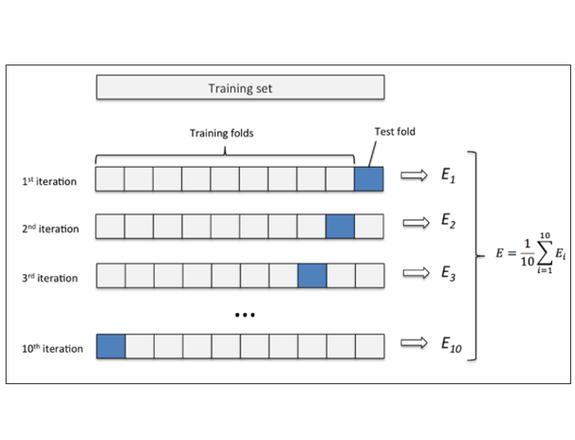

K-fold Cross-Validation: The data is divided into K equally sized subsets. The model is trained on K-1 subsets and validated on the remaining subset. This process is repeated K times, with each subset used exactly once as the validation data.

-

Leave-One-Out Cross-Validation: This method is similar to K-fold cross-validation, but with K equal to the number of observations. Each observation is used exactly once as the validation data, and the remaining observations are used as the training data.

-

Stratified K-fold Cross-Validation: This method is similar to K-fold cross-validation, but with the additional constraint that each fold must contain approximately the same percentage of samples of each target class as the complete set.
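To make the three schemes concrete, here is a minimal sketch in plain Python that only generates index splits. The function names are hypothetical and chosen for illustration, and the data is not shuffled, which you would normally do first. In practice, a library such as scikit-learn provides equivalent splitters (`KFold`, `LeaveOneOut`, `StratifiedKFold`).

```python
from collections import defaultdict


def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx) pairs; each sample is validated exactly once."""
    # Fold sizes differ by at most one when n_samples is not divisible by k.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, val_idx
        start += size


def leave_one_out_indices(n_samples):
    """Leave-one-out is K-fold with K equal to the number of observations."""
    return k_fold_indices(n_samples, n_samples)


def stratified_fold_assignment(labels, k):
    """Return a fold id per sample, dealing each class round-robin across folds."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    fold_of = [None] * len(labels)
    for indices in by_class.values():
        for position, idx in enumerate(indices):
            fold_of[idx] = position % k
    return fold_of


folds = list(k_fold_indices(10, 3))          # 3 folds over 10 samples
loo_folds = list(leave_one_out_indices(4))   # 4 folds, one sample each
labels = ["a"] * 6 + ["b"] * 3               # toy class labels
fold_of = stratified_fold_assignment(labels, 3)
```

With the toy labels above, every fold receives the same share of class "a" and class "b", which is the property stratification is meant to preserve.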

After Cross-Validation: Making Predictions

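Once you’ve completed the cross-validation process and the scores on the validation folds are satisfactory, it’s time to make predictions. Keep in mind that cross-validation trains a separate model on each split and mainly yields a performance estimate, so a common practice is to refit the chosen model and settings on the full training set before predicting on new data. The numbered sections below cover the key considerations.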
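Before going through those considerations, the relationship between cross-validation and the final model can be sketched in code. The example below is a minimal illustration under stated assumptions: the model is a hand-written least-squares line, the data values are invented, and the helper names (`fit_line`, `predict_line`) are hypothetical. It estimates error with 4-fold cross-validation, discards the fold models, and then refits once on all training data before predicting.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def predict_line(model, xs):
    slope, intercept = model
    return [slope * x + intercept for x in xs]


def mean_squared_error(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Toy training data (illustrative values only): roughly y = 2x + 1.
x_train = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0]
y_train = [1.1, 2.9, 5.2, 6.8, 9.1, 11.0, 12.9, 15.2]

k = 4
fold_scores = []
for fold in range(k):
    # Every k-th sample goes to the validation fold; the rest train the fold model.
    val_idx = [i for i in range(len(x_train)) if i % k == fold]
    train_idx = [i for i in range(len(x_train)) if i % k != fold]
    fold_model = fit_line([x_train[i] for i in train_idx], [y_train[i] for i in train_idx])
    val_pred = predict_line(fold_model, [x_train[i] for i in val_idx])
    fold_scores.append(mean_squared_error([y_train[i] for i in val_idx], val_pred))

cv_mse = sum(fold_scores) / k  # performance estimate only; fold models are discarded

# Refit once on all training data, then predict for unseen inputs.
final_model = fit_line(x_train, y_train)
predictions = predict_line(final_model, [8.0, 9.0])
```

The cross-validated error describes how the modeling procedure is expected to perform, while `final_model` is the object that actually produces predictions.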

1. Preparing the Test Set

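Before making predictions, you need to ensure that the test set is properly prepared. Every transformation should use parameters learned from the training data only, so that no information from the test set leaks into the model. This involves:

- Handling Missing Values: If there are missing values in the test set, handle them the same way as in training. Common techniques include imputation (for example, with the training-set mean or median) and using a model to predict the missing values. Deleting rows is rarely an option at prediction time, since a deleted row receives no prediction.

- Feature Scaling: If you applied feature scaling during the training phase, apply the same scaling to the test set, reusing the parameters (such as the mean and standard deviation) computed on the training data rather than recomputing them on the test set.

- Handling Categorical Variables: If you used one-hot encoding or label encoding for categorical variables during the training phase, apply the same fitted encoding to the test set, and decide in advance how to treat categories that did not appear in training.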
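The three preparation steps can be sketched together as a "fit on training data, apply to test data" pair of functions. This is a simplified illustration in plain Python: the rows, column meanings, and function names are hypothetical, median imputation and all-zero encoding of unseen categories are choices made for this sketch, and real projects would typically use library transformers such as scikit-learn's `SimpleImputer`, `StandardScaler`, and `OneHotEncoder`.

```python
from statistics import mean, median, pstdev

# Hypothetical toy rows: (age, city). None marks a missing value.
train_rows = [(20.0, "paris"), (30.0, "rome"), (None, "paris"), (40.0, "oslo")]
test_rows = [(None, "rome"), (50.0, "lima")]  # "lima" never appeared in training


def fit_preprocessor(rows):
    """Learn imputation, scaling, and encoding parameters from training rows only."""
    ages = [age for age, _ in rows if age is not None]
    fill_value = median(ages)
    filled = [fill_value if age is None else age for age, _ in rows]
    return {
        "fill_value": fill_value,
        "mean": mean(filled),
        "std": pstdev(filled) or 1.0,  # guard against a zero standard deviation
        "categories": sorted({city for _, city in rows}),
    }


def transform(rows, params):
    """Apply the stored training parameters; nothing is re-estimated here."""
    out = []
    for age, city in rows:
        age = params["fill_value"] if age is None else age
        scaled = (age - params["mean"]) / params["std"]
        # One-hot encode against the training categories; unseen values become all zeros.
        one_hot = [1.0 if city == c else 0.0 for c in params["categories"]]
        out.append([scaled] + one_hot)
    return out


params = fit_preprocessor(train_rows)
x_train = transform(train_rows, params)
x_test = transform(test_rows, params)
```

Because `transform` only reads from `params`, the test set is guaranteed to have the same columns, in the same order and on the same scale, as the data the model was trained on.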

2. Using the Model for Prediction

Once the test set is prepared, you can use the trained model to make predictions. Here’s a step-by-step guide:

- Load the trained model.

- Preprocess the test set as described in the previous section.

- Make predictions using the trained model.

- Store the predictions in a separate file or database.
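The four steps above might look like the following in plain Python. Everything named here is an assumption made for illustration: the file names, the stand-in linear model stored as a dictionary, and the stored mean and standard deviation. The sketch uses `pickle` for persistence, which should only be used to load files from a trusted source.

```python
import csv
import os
import pickle
import tempfile


def predict(model, rows):
    """Hypothetical stand-in model: a linear score thresholded at zero."""
    return [1 if model["weight"] * row[0] + model["bias"] > 0 else 0 for row in rows]


workdir = tempfile.mkdtemp()
model_path = os.path.join(workdir, "model.pkl")          # hypothetical file name
output_path = os.path.join(workdir, "predictions.csv")   # hypothetical file name

# Training time: persist the fitted model together with its preprocessing parameters.
with open(model_path, "wb") as f:
    pickle.dump({"model": {"weight": 1.0, "bias": 0.0}, "mean": 10.0, "std": 2.0}, f)

# Step 1: load the trained model (only unpickle files you trust).
with open(model_path, "rb") as f:
    bundle = pickle.load(f)

# Step 2: preprocess the test set with the stored training statistics.
raw_test = [8.0, 11.0, 14.0]
x_test = [[(value - bundle["mean"]) / bundle["std"]] for value in raw_test]

# Step 3: make predictions.
predictions = predict(bundle["model"], x_test)

# Step 4: store the predictions alongside an identifier for each row.
with open(output_path, "w", newline="") as f:
    writer = csv.writer(f)
    writer.writerow(["row_id", "prediction"])
    writer.writerows(enumerate(predictions))
```

Saving the preprocessing parameters in the same file as the model is one way to keep the two from drifting apart between training and prediction.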

3. Evaluating the Predictions

After making predictions, it’s important to evaluate the model’s performance on the test set, which requires the true labels for that set. For a classification task, common evaluation metrics include:

- Accuracy: The proportion of correctly classified instances.

- Precision: The proportion of true positives out of the total predicted positives.

- Recall: The proportion of true positives out of the total actual positives.

- F1 Score: The harmonic mean of precision and recall.
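These four metrics follow directly from counts of true positives, false positives, and false negatives. The sketch below computes them for a binary problem. The function name is hypothetical, the labels are invented for illustration, and returning 0.0 when a denominator is zero is a convention chosen for this example.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)

    accuracy = correct / len(pairs)
    # Return 0.0 when a denominator is zero (a convention chosen for this sketch).
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Illustrative labels only, not results from a real model.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)
```

In this toy case there are 3 true positives, 1 false positive, and 1 false negative, so all four metrics come out to 0.75. On imbalanced data they usually diverge, which is why accuracy alone can be misleading.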

4. Iterating and Improving

Once you’ve evaluated the model’s performance, you may want to iterate and improve the model. This can involve:

- Feature Engineering: Creating new features or modifying existing features to improve the model’s performance.